Kube-Prometheus-Stack And ArgoCD - How Workarounds Are Born

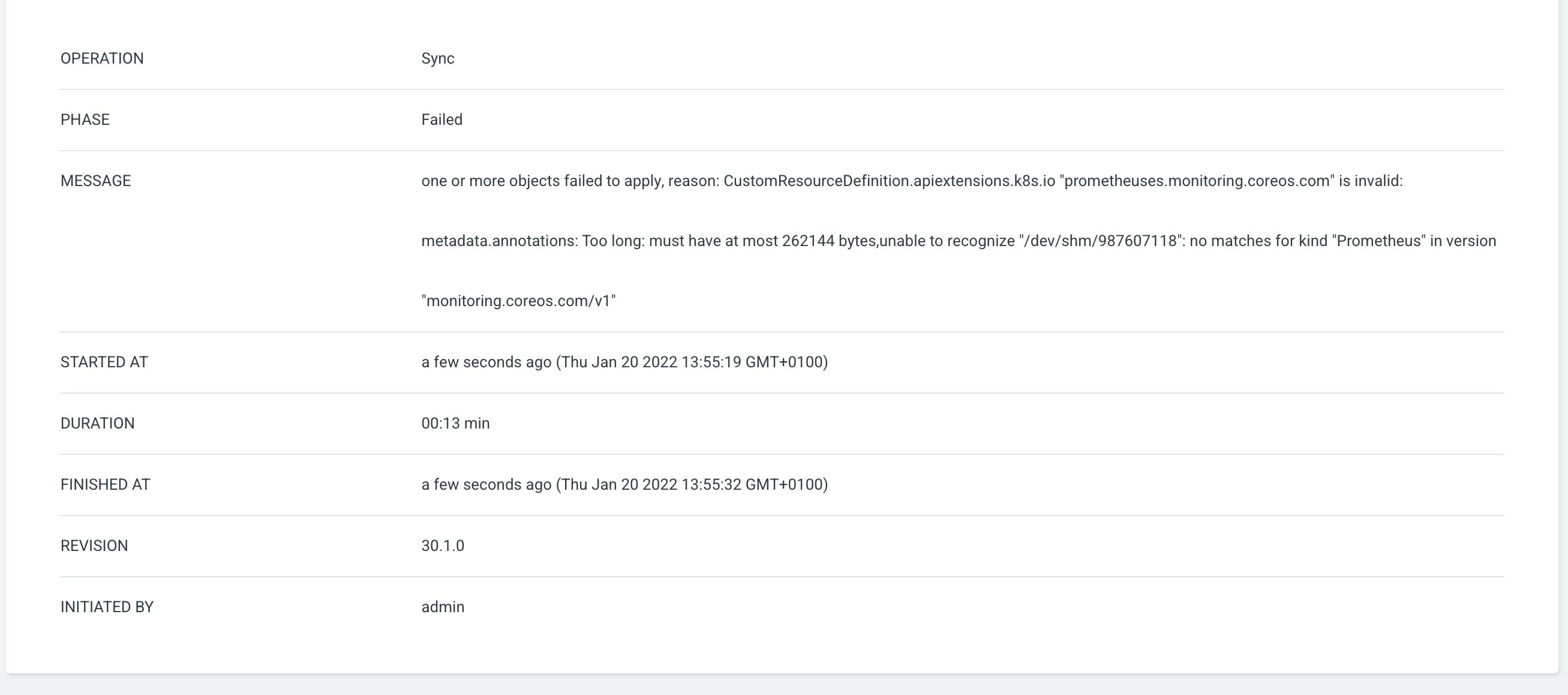

"prometheuses.monitoring.coreos.com" is invalid: metadata.annotations: Too long: must have at most 262144 bytes


!!Update!!

If you use ArgoCD > 2.3, please check the new article: the workaround described here is no longer needed!

Situation

I am currently part of a huge and interesting project. While working on the installation of the kube-prometheus-stack, I ran full-speed into the following strange error message:

one or more objects failed to apply, reason:
CustomResourceDefinition.apiextensions.k8s.io "prometheuses.monitoring.coreos.com"
is invalid: metadata.annotations: Too long: must have at most 262144 bytes,unable to
recognize "/dev/shm/987607118":
no matches for kind "Prometheus" in version "monitoring.coreos.com/v1"

After some fruitless attempts to figure it out, I asked Dr. Google for help.

I found several GitHub issues on this topic. Let me share the one from which I took some of the ideas for this workaround.

github.com/prometheus-community/helm-charts..

Workaround

The problem is that ArgoCD uses kubectl apply to deploy the manifests, and kubectl apply stores the entire manifest in the kubectl.kubernetes.io/last-applied-configuration annotation. For a big CustomResourceDefinition, that annotation exceeds the limit of 262144 bytes and, voilà, it breaks!

My workaround is a kustomization file with the following content:
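To make the size problem concrete, here is a minimal sketch that estimates how large the annotation would become for a given manifest. It assumes the annotation holds the manifest as compact JSON. The helper names and the synthetic CRD are hypothetical and only serve as an illustration, they are not taken from kubectl or ArgoCD.

```python
import json

# Limit quoted in the error message: maximum size of metadata.annotations in bytes.
ANNOTATION_LIMIT_BYTES = 262144


def last_applied_size(manifest: dict) -> int:
    """Approximate size in bytes of the annotation kubectl apply would write.

    Assumption: the manifest is stored as compact JSON.
    """
    return len(json.dumps(manifest, separators=(",", ":")).encode("utf-8"))


def fits_in_annotation(manifest: dict) -> bool:
    """Return True if the serialized manifest stays within the annotation limit."""
    return last_applied_size(manifest) <= ANNOTATION_LIMIT_BYTES


# Synthetic stand-in for a big CRD: a schema with many long field descriptions.
big_crd = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    "metadata": {"name": "examples.demo.invalid"},
    "spec": {
        "properties": {
            f"field{i}": {"description": "x" * 500} for i in range(600)
        }
    },
}

# The same kind of object with a tiny schema.
small_crd = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    "metadata": {"name": "tiny.demo.invalid"},
    "spec": {"properties": {"field0": {"description": "short"}}},
}

if __name__ == "__main__":
    for name, crd in (("big", big_crd), ("small", small_crd)):
        print(name, last_applied_size(crd), fits_in_annotation(crd))
```

The real Prometheus CRD is large for the same reason as the synthetic one: its schema carries a long description for almost every field.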

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-alertmanagerconfigs.yaml
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-alertmanagers.yaml
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-podmonitors.yaml
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-probes.yaml
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-prometheuses.yaml
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-prometheusrules.yaml
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-servicemonitors.yaml
- https://raw.githubusercontent.com/prometheus-community/helm-charts/kube-prometheus-stack-30.1.0/charts/kube-prometheus-stack/crds/crd-thanosrulers.yaml

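I use the remote locations so that I do not need to download the files. Take care to pin the version in the URLs to the version of the kube-prometheus-stack Helm chart you currently use.

Then I ran the kustomization via a dedicated pipeline, kube-prometheus-stack-workaround.yaml, using kubectl create instead of kubectl apply. Unlike kubectl apply, kubectl create does not store the manifest in an annotation by default, so the big CRDs stay under the limit: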
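Since the chart version appears in every URL, it is easy to bump one entry and forget another. As a rough sketch, the file could be generated from a single version string. The function names are hypothetical, and the list of CRD files is copied from the kustomization above, so it may differ for other chart versions.

```python
from typing import List

# Base of the raw URLs used in the kustomization file.
BASE_URL = "https://raw.githubusercontent.com/prometheus-community/helm-charts"

# CRD file suffixes as listed for chart version 30.1.0; other versions may differ.
CRD_NAMES = [
    "alertmanagerconfigs",
    "alertmanagers",
    "podmonitors",
    "probes",
    "prometheuses",
    "prometheusrules",
    "servicemonitors",
    "thanosrulers",
]


def crd_urls(chart_version: str) -> List[str]:
    """Build the CRD URLs, all pinned to the same chart release tag."""
    tag = f"kube-prometheus-stack-{chart_version}"
    return [
        f"{BASE_URL}/{tag}/charts/kube-prometheus-stack/crds/crd-{name}.yaml"
        for name in CRD_NAMES
    ]


def render_kustomization(chart_version: str) -> str:
    """Render the kustomization file as text for the given chart version."""
    lines = [
        "apiVersion: kustomize.config.k8s.io/v1beta1",
        "kind: Kustomization",
        "resources:",
    ]
    lines += [f"- {url}" for url in crd_urls(chart_version)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Prints the same content as the kustomization file shown above.
    print(render_kustomization("30.1.0"), end="")
```

Keeping the version in one place means the CRDs and the Helm release can be bumped together in the same change.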

kubectl create -k controlplane/service/monitoring/kube-prometheus-stack/workaround/kube-prometheus-stack-crds

After this, I could deploy the Helm chart without a problem.

Le end

I hope this will be fixed soon, as I don't want to keep this workaround in my production environment for too long.