How to measure and monitor an inlets exit server on Oracle Cloud Infrastructure

With the new inlets v0.9.0 feature.

This is an update to my earlier how-to article about running an inlets exit server on Oracle Cloud Infrastructure. If you missed it, here is the link.

That tweet is the raison d'être for this how-to!

I am "crazy" about all things monitoring-related, so I just could not miss this opportunity.

Let us change the Terraform code from last time to get ready for the upcoming major update, inlets v0.9.0.

BTW: Alex Ellis covered all the upcoming new functionality in his own blog post, so go and check it out.

What did I change in the code? Nothing on the infrastructure part.

We still run on OCI. The only difference is that cloud-init now also deploys the Grafana agent, which pumps our data into Grafana Cloud.

Here is the small addition to the startup script. Check the GitHub repo for in-depth details.

    mkdir /grafana

    tee /grafana/agent.yaml <<EOF
    server:
      log_level: info
      http_listen_port: 12345
    ...
    EOF

    tee /etc/systemd/system/grafana-agent.service <<EOF
    ...
    EOF

    ...

    apt install -y unzip

    curl -SLsf https://github.com/grafana/agent/releases/download/v0.18.2/agent-linux-amd64.zip -o /tmp/agent-linux-amd64.zip && \
      unzip /tmp/agent-linux-amd64.zip -d /tmp && \
      chmod a+x /tmp/agent-linux-amd64 && \
      mv /tmp/agent-linux-amd64 /usr/local/bin/agent-linux-amd64

    systemctl restart grafana-agent.service
    systemctl enable grafana-agent.service
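The pinned agent release and the final service start are the two spots most likely to need attention later. The following is a minimal sketch of two helpers for them; the names `agent_download_url` and `check_agent_service` are hypothetical and not part of the repo, while the URL pattern and the unit name are the ones used in the startup script.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative and not taken from the repo.

# Build the release URL for a given Grafana agent version,
# following the URL pattern used in the startup script.
agent_download_url() {
  printf 'https://github.com/grafana/agent/releases/download/%s/agent-linux-amd64.zip\n' "$1"
}

# Report whether the systemd unit is active.
# On failure, print the most recent log lines of the unit to stderr.
check_agent_service() {
  if systemctl is-active --quiet grafana-agent.service; then
    echo "grafana-agent is running"
  else
    echo "grafana-agent is NOT running" >&2
    journalctl -u grafana-agent.service -n 20 --no-pager >&2
    return 1
  fi
}
```

On the instance this could be used as `curl -SLsf "$(agent_download_url v0.18.2)" -o /tmp/agent-linux-amd64.zip` during setup, and `check_agent_service` once cloud-init has finished.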

Grafana Cloud

What is the Grafana Cloud?

Grafana Cloud is a composable observability platform, integrating metrics, traces and logs with Grafana. Leverage the best open source observability software – including Prometheus, Loki, and Tempo – without the overhead of installing, maintaining, and scaling your observability stack.

And they offer a free tier, which is absolutely perfect for us to use with our inlets exit nodes. The free tier includes:

- 10,000 series for Prometheus or Graphite metrics

- 50 GB of logs

- 50 GB of traces

- 14 days retention for metrics, logs and traces

- Access for up to 3 team members

So go and create an account. I'll wait here.

Grafana Agent

OK, that's the last picture of Agent Smith, we got the joke.

The Grafana agent collects observability data and sends it to Grafana Cloud. Once the agent is deployed to your hosts, it collects and sends Prometheus-style metrics and log data using a pared-down Prometheus collector.

The Grafana agent is designed for easy installation and updates. It uses a subset of Prometheus code features to interact with hosted metrics, specifically:

- Service discovery

- Scraping

- Write ahead log (WAL)

- Remote writing

Along with this, the agent typically uses less memory than scraping metrics using Prometheus. Yeah!

API Key

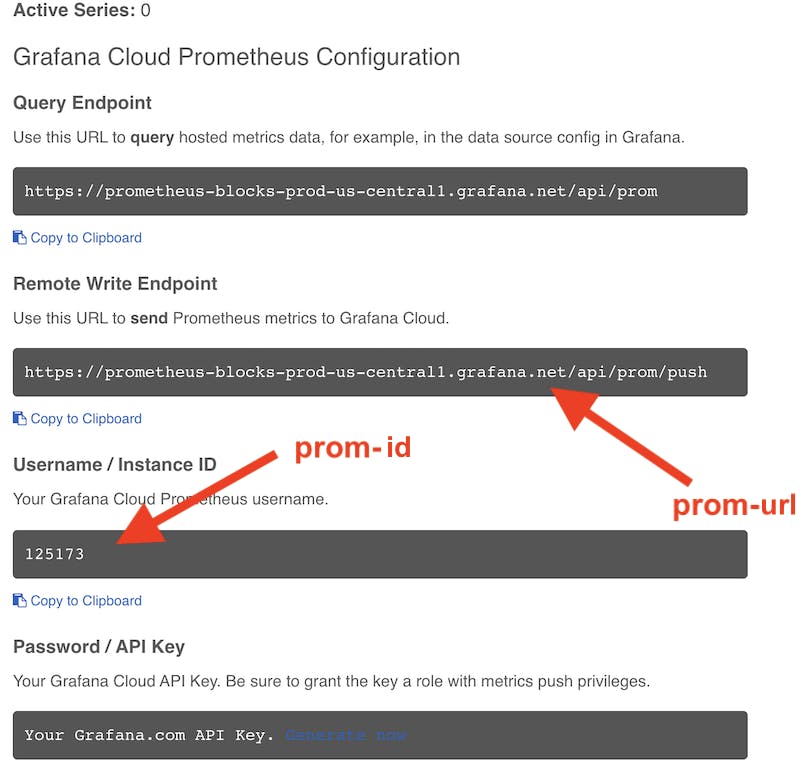

Once you have created an account, we need the values for the three new variables that the updated Terraform script requires:

prom-id = xxx

prom-pw = xxx

prom-url = xxx

Just follow the instructions at grafana.com/docs/grafana-cloud/reference/cr.. to get the API key we are going to use as prom-pw.

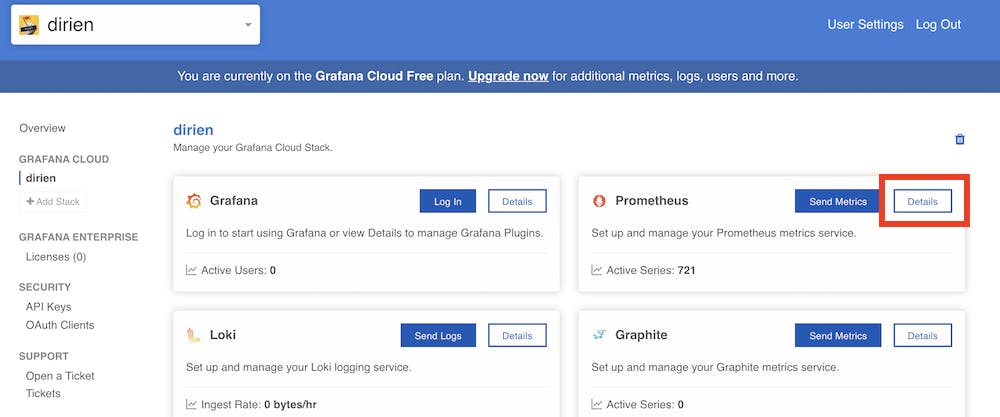

We find both the prom-id and the prom-url in the same spot, in the Prometheus details on your account details page:

Click on Details:

Grab the Remote Write Endpoint and Instance ID.
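One way to hand the three values to Terraform is a `terraform.tfvars` file, which Terraform loads automatically from the working directory. The sketch below is only an illustration: `write_tfvars` is a hypothetical helper, and it assumes the repo declares all three variables as strings. The repo may expect the values to be supplied differently, so check its README first.

```shell
#!/bin/sh
# Hypothetical helper, not part of the repo.
# Usage: write_tfvars <file> <prom-id> <prom-pw> <prom-url>
# Assumes all three variables are strings and that the values
# contain no double quotes (they are written unescaped).
write_tfvars() {
  (
    # The file holds an API key, so keep it private to the current user.
    umask 077
    cat > "$1" <<EOF
prom-id  = "$2"
prom-pw  = "$3"
prom-url = "$4"
EOF
  )
}
```

A call such as `write_tfvars terraform.tfvars "$INSTANCE_ID" "$API_KEY" "$REMOTE_WRITE_ENDPOINT"` would then produce the file, where the three shell variables are placeholders for the values collected above. Keep the resulting file out of version control.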

Agent config

Here is the full agent config we are going to use. I will go into more detail on the points of interest afterwards.

    server:
      log_level: info
      http_listen_port: 12345
    prometheus:
      wal_directory: /tmp/wal
      global:
        scrape_interval: 60s
      configs:
        - name: agent
          scrape_configs:
            - job_name: 'http-tunnel'
              static_configs:
                - targets: [ 'localhost:8123' ]
              scheme: https
              authorization:
                type: Bearer
                credentials: ${authToken}
              tls_config:
                insecure_skip_verify: true
          remote_write:
            - url: ${promUrl}
              basic_auth:
                username: ${promId}
                password: ${promPW}
    integrations:
      agent:
        enabled: true
      prometheus_remote_write:
        - url: ${promUrl}
          basic_auth:
            username: ${promId}
            password: ${promPW}
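Because the credentials and the URL arrive through template variables, a quick sanity check on the rendered file can catch a variable that was left unrendered or ended up empty. This is a minimal sketch, assuming the rendered config lands in `/grafana/agent.yaml` as in the startup script; `find_unrendered_values` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper, not part of the repo.
# Prints (with line numbers) every line that still contains a literal "${"
# or where url/username/password/credentials has no value.
# Exit status 0 means a problem was found, 1 means the file looks rendered.
find_unrendered_values() {
  grep -nE '\$\{|(url|username|password|credentials):[[:space:]]*$' "$1"
}
```

On the exit server, `find_unrendered_values /grafana/agent.yaml && echo "agent.yaml needs attention"` prints the offending lines, if there are any.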

Inlets scraping config

    ...
    scrape_configs:
      - job_name: 'http-tunnel'
        static_configs:
          - targets: [ 'localhost:8123' ]
        scheme: https
        authorization:
          type: Bearer
          credentials: ${authToken}
        tls_config:
          insecure_skip_verify: true
    ...

This section comes directly from Alex's how-to. I just replaced the credentials with a template variable.
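To see what the agent will scrape, the same request can be made by hand on the exit server. This is a minimal sketch, assuming the metrics are served at `/metrics` (the default path of a Prometheus scrape job, which the config above does not override) and that `TOKEN` holds the inlets auth token. Both helper names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers, not part of inlets or the repo.

# Fetch the metrics the same way the scrape job does: HTTPS, bearer token,
# and no certificate verification (-k mirrors insecure_skip_verify: true).
# Assumes TOKEN is set and the endpoint path is /metrics.
fetch_inlets_metrics() {
  curl -sk -H "Authorization: Bearer ${TOKEN}" https://localhost:8123/metrics
}

# Read Prometheus text format on stdin and keep only the sample lines
# of one metric name, dropping the "# HELP" and "# TYPE" comments.
filter_metric() {
  grep -v '^#' | grep -E "^$1(\{| )"
}
```

For example, `fetch_inlets_metrics | filter_metric <metric-name>` narrows the output down to a single metric. See Alex's how-to for the metric names that inlets exposes.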

remote-write config

    ...
    remote_write:
      - url: ${promUrl}
        basic_auth:
          username: ${promId}
          password: ${promPW}
    ...
    prometheus_remote_write:
      - url: ${promUrl}
        basic_auth:
          username: ${promId}
          password: ${promPW}

We're going to use the remote write functionality. That means the Grafana agent will push all collected metrics to our central Prometheus in Grafana Cloud. Keep this in mind, as it is a major difference from a classic Prometheus installation you may have worked with, where the central server itself scrapes its targets.

I highly recommend watching the YouTube video "The Future is Bright, the Future is Prometheus Remote Write" by Tom Wilkie, Grafana Labs.

That's it for the new bits. Now we can deploy the whole stack via:

    terraform apply --auto-approve
    ...
    terraform output inlets-connection-string

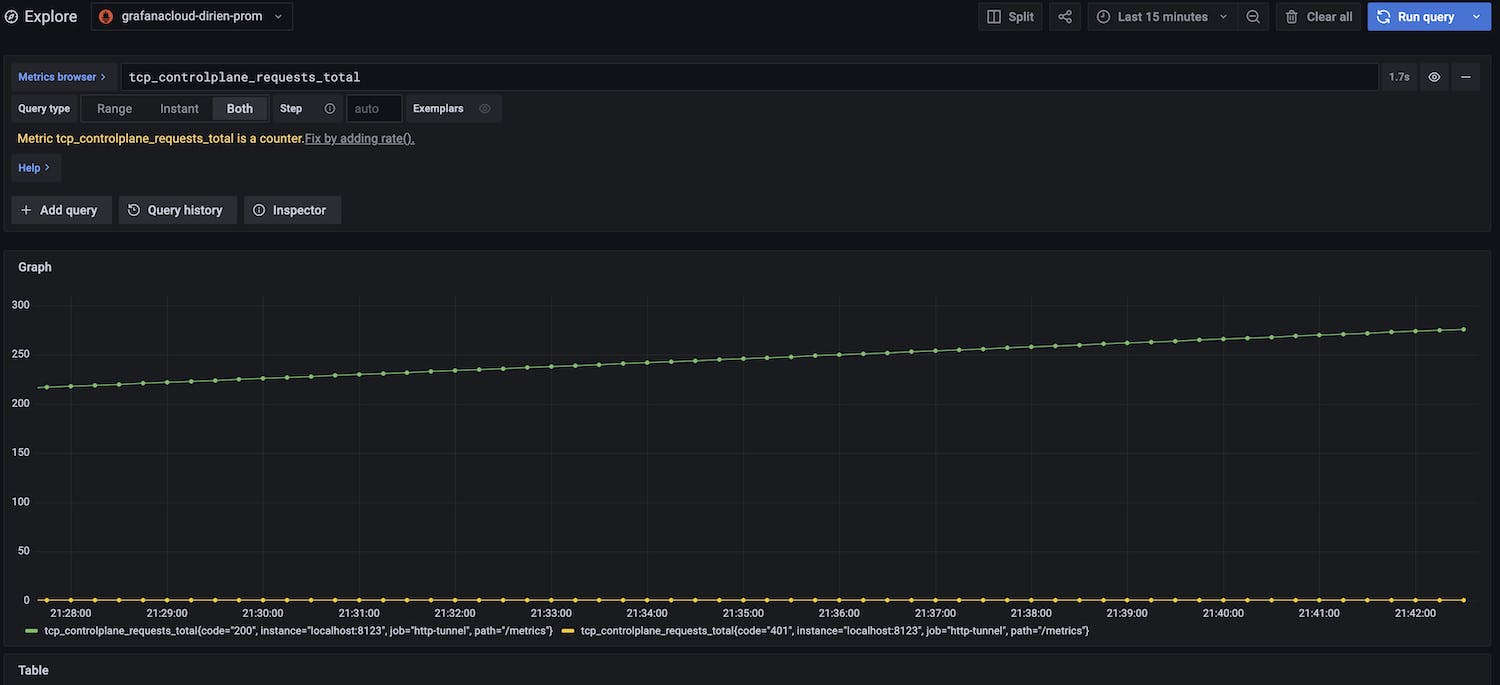

When you log into Grafana, you can now start to display your inlets metrics:

See Alex's how-to for all the available metrics.

- Check out the GitHub repo -> github.com/dirien/inlets-oci-terraform

- Join the inlets Slack channel.

- The official documentation -> inlets.dev

- Drop me a DM on Twitter -> twitter.com/_ediri